
Released At: Wired AI Ninja Compo 2023

Achievements: C64 Demo Competition at Wired AI Ninja Compo 2023: #2

Credits :

SIDs used in this release :

Download :


Summary

Submitted by spider-j on 25 December 2023

Scrolltext:

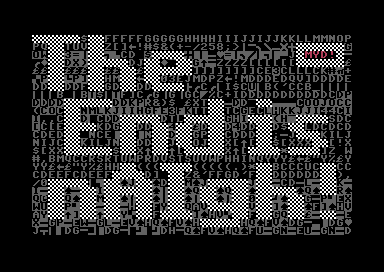

The End is nAIgh!

The Luddites were members of a 19th-century movement of English textile workers which opposed the use of certain types of cost-saving machinery, often by destroying the machines in clandestine raids. They protested against manufacturers who used machines in "a fraudulent and deceitful manner" to replace the skilled labour of workers and drive down wages by producing inferior goods.

Many Luddite groups were highly organized and pursued machine-breaking as one of several tools for achieving specific political ends. In addition to the raids, Luddites coordinated public demonstrations and the mailing of letters to local industrialists and government officials. These letters explained their reasons for destroying the machinery and threatened further action if the use of "obnoxious" machines continued.

One of the first major contemporary anti-technological thinkers was French philosopher Jacques Ellul. In The Technological Society (1964), Ellul argued that logical and mechanical organization "eliminates or subordinates the natural world." Ellul defined technique as the totality of organizational methods and technology aimed at maximum rational efficiency. According to Ellul, technique has an impetus which tends to drown out human concerns: "The only thing that matters technically is yield, production. This is the law of technique; this yield can only be obtained by the total mobilization of human beings, body and soul, and this implies the exploitation of all human psychic forces."

Neo-Luddism calls for slowing or stopping the development of new technologies. Neo-Luddism prescribes a lifestyle that abandons specific technologies, because of its belief that this is the best prospect for the future.

In 1990, attempting to reclaim the term 'Luddite' and found a unified movement, Chellis Glendinning published her "Notes towards a Neo-Luddite manifesto". She argues in favor of the "search for new technological forms" which are local in scale and promote social and political freedom.

Feeding an AI system requires data, the representation of information. Some information, such as gender, age and temperature, can be easily coded and quantified. However, there is no way to uniformly quantify complex emotions, beliefs, cultures, norms and values. Because AI systems cannot process these concepts, the best they can do is to seek to maximize benefits and minimize losses for people according to mathematical principles. This utilitarian logic, though, often contravenes what we would consider noble from a moral standpoint: prioritizing the weak over the strong, safeguarding the rights of the minority despite giving up greater overall welfare, and seeking truth and justice rather than telling lies.

The fact that AI does not understand culture or values does not imply that AI is value-neutral. Rather, any AI designed by humans is implicitly value-laden. It is consciously or unconsciously imbued with the belief system of its designer. Biases in AI can come from the representativeness of the historical data, the ways in which data scientists clean and interpret the data, which categorizing buckets the model is designed to output, the choice of loss function and other design features. A more aggressive company culture, for example, might favor maximizing recall, the proportion of actual positives that the model identifies as positive, while a more prudent culture would encourage maximizing precision, the proportion of labelled positives that are actually positive. While such a distinction might seem trivial, in a medical setting it can become an issue of life and death: do we try to distribute as much of a treatment as possible despite its side effects, or do we act more prudently to limit the distribution of the treatment to minimize side effects, even if many people will never get the treatment? Within a single AI model, these two goals can rarely be maximized simultaneously, because lowering the decision threshold to catch more positives typically also admits more false positives, and raising it does the reverse. People have to make a choice when designing an AI system, and the choice they make will inevitably reflect the values of the designers.
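The tension between recall and precision described above can be made concrete with a small sketch. This is not part of the scrolltext: the function name `precision_recall`, the scores, the labels and the thresholds are all made up for illustration, assuming a model that outputs a score per patient and treats everyone at or above a chosen threshold.

```python
def precision_recall(scores, labels, threshold):
    """Return (precision, recall) when scores >= threshold are labelled positive."""
    predicted = [s >= threshold for s in scores]
    tp = sum(1 for p, y in zip(predicted, labels) if p and y)      # true positives
    fp = sum(1 for p, y in zip(predicted, labels) if p and not y)  # false positives
    fn = sum(1 for p, y in zip(predicted, labels) if not p and y)  # false negatives
    # Convention chosen here: an empty denominator counts as a perfect score.
    precision = tp / (tp + fp) if (tp + fp) else 1.0
    recall = tp / (tp + fn) if (tp + fn) else 1.0
    return precision, recall


# Hypothetical model scores and true labels (1 = patient actually benefits).
scores = [0.95, 0.85, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 0, 1, 0, 0]

# A low threshold treats many patients; a high threshold treats few.
for threshold in (0.25, 0.50, 0.90):
    p, r = precision_recall(scores, labels, threshold)
    print(f"threshold={threshold:.2f} precision={p:.2f} recall={r:.2f}")
```

On this toy data the lowest threshold reaches every patient who benefits but also treats some who do not, while the highest threshold treats only a patient who benefits and misses most of the others. Where to set the threshold is the designer's decision, not the model's.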

Take responsibility, now.
